A multiple-choice question (MCQ), also called an objective-response question, is a form of objective assessment in which respondents are asked to select the correct answer from a list of choices.

Strengths of Multiple-Choice Questions (MCQs)

- MCQs can be written to assess various levels of learning from basic recall to application, analysis, and evaluation.

- MCQs can be written to assess a variety of topics across any discipline.

- MCQs are not susceptible to scorer inconsistency sometimes seen with open-response questions.

- MCQs can allow for a broad representation of course material, increasing validity.

Challenges with MCQs

- MCQs are not as easy to write as they appear; careful wording is crucial to avoid misinterpretation.

- Developing a database of MCQs is necessary, since students may obtain questions from previous semesters' exams.

- MCQs may cause anxiety in some students.

- Depending on how a question is written, you may give the answer away!

- MCQs should assess both lower- and higher-order thinking; these can sometimes be difficult to develop.

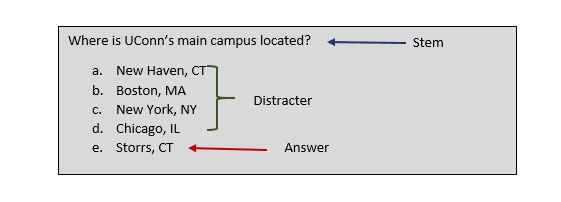

A multiple choice question (MCQ) is an assessment item consisting of a stem, which poses the question or problem, followed by a list of possible responses, also known as options or alternatives. One of the alternatives will be the correct or best answer, while the others are called distracters, the incorrect or less correct answers.

Stem:

The stem should be able to stand alone as a short-answer question without the alternatives. The stem should be in either question format or completion format, though experts typically recommend the question format. When using a completion stem, the blank to be answered should always be at the end. The stem should always include a verb.

Distracters:

Distracters are intended to offer feasible but inaccurate, incomplete, or less accurate answer options that a student who does not know the material, or has an incomplete understanding of it, may select. Typically, a question will have 3 or 4 distracters. Research suggests that more than 4 distracters provide little benefit, while fewer than 3 distracters improve a student's odds of guessing correctly. All distracters should be homogeneous in content, form, and grammatical structure.
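The effect of the number of distracters on guessing can be worked out with simple arithmetic. The following is a minimal sketch, assuming one correct answer per question and a student who guesses blindly with equal probability across all alternatives; the function names `guess_probability` and `expected_guess_score` are illustrative, not part of any standard tool.

```python
from fractions import Fraction


def guess_probability(num_distracters: int) -> Fraction:
    """Chance that a blind guess hits the one correct answer."""
    if num_distracters < 1:
        raise ValueError("an MCQ needs at least one distracter")
    # One correct answer plus the distracters gives the number of alternatives.
    return Fraction(1, num_distracters + 1)


def expected_guess_score(num_items: int, num_distracters: int) -> Fraction:
    """Expected number of items answered correctly by guessing alone."""
    return num_items * guess_probability(num_distracters)


if __name__ == "__main__":
    for n in (2, 3, 4):
        print(n, "distracters:", guess_probability(n))
```

Under these assumptions, moving from 2 to 3 distracters lowers the chance of a correct blind guess from 1/3 to 1/4. Real students often eliminate implausible options first, so actual guessing odds are usually higher than this baseline.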

Each distracter should be unique and plausible. If no one ever picks a distracter as the answer, it is not a good distracter. One distracter should not be rephrased to create another distracter. Avoid using synonyms or similarly spelled words as a means of creating a distracter.
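One way to check whether a distracter is ever picked is to tally student responses after an exam. The sketch below assumes responses are recorded as single option labels; the function names and the sample data are hypothetical and only illustrate the idea.

```python
from collections import Counter


def distracter_counts(options, key, responses):
    """Count how often each incorrect option was selected."""
    counts = Counter(responses)
    return {opt: counts.get(opt, 0) for opt in options if opt != key}


def unused_distracters(options, key, responses):
    """Return the distracters that no respondent selected."""
    tallies = distracter_counts(options, key, responses)
    return [opt for opt, n in tallies.items() if n == 0]


if __name__ == "__main__":
    # Hypothetical responses to one question whose correct answer is "B".
    sample = ["B", "A", "B", "C", "B", "A"]
    print(distracter_counts("ABCD", "B", sample))
    print(unused_distracters("ABCD", "B", sample))
```

A distracter that appears in the unused list across a reasonably large group of students is a candidate for revision or replacement.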

Reducing guessing

Students have developed techniques for guessing correct answers. Here are some common guessing rules of thumb and how to defeat them:

| Test-Wise Technique | Defeating the Technique |
| --- | --- |
| Pick the longest answer | Make sure the longest answer is correct about 25% of the time if there are 4 alternatives, or make all answers the same length |
| Pick the “b” option | Make each answer option the correct one an equal number of times |
| Never pick an answer with “always” or “never” in it | Make sure answers with “always” and “never” are correct as often as they are incorrect, or avoid using these words |
| If two answers are express opposites, one is the correct answer | Offer opposites when neither is correct |
| If in doubt, guess | Include at least 3 or 4 distractors |
| Pick the scientific-sounding answer | Use scientific-sounding jargon in incorrect answers; make the simple answer correct |
| Pick a word related to the topic | Use terminology from the topic in distractors too |
| Avoid picking any answer with grammatical errors | Use expressions like a/an, is/are, or cause(s) |
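Making each answer option the correct one an equal number of times is easy to get wrong by hand. The following is a rough sketch of one way to plan the position of the correct answer across an exam, assuming four options labeled A to D; `balanced_key_positions` is a hypothetical helper, not a feature of any particular testing platform.

```python
import random
from collections import Counter


def balanced_key_positions(num_items, labels="ABCD", seed=None):
    """Assign a correct-answer position to each item, as evenly as possible."""
    rng = random.Random(seed)
    labels = list(labels)
    full_rounds, extra = divmod(num_items, len(labels))
    # Every label appears full_rounds times; leftovers are drawn without repeats.
    positions = labels * full_rounds + rng.sample(labels, extra)
    # Shuffle so the order of correct positions has no predictable pattern.
    rng.shuffle(positions)
    return positions


if __name__ == "__main__":
    key = balanced_key_positions(20, seed=1)
    print(key)
    print(Counter(key))
```

With 20 items and 4 options, each position ends up correct exactly 5 times; when the item count does not divide evenly, the counts differ by at most one.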

Additional resources:

- Chiavaroli, N. (2017). Negatively-worded multiple choice questions: An avoidable threat to validity. Practical Assessment, Research, and Evaluation, 22, Article 3.

- Ebel, R. L., & Frisbie, D. A. (1986). Essentials of educational measurement (4th ed.). Englewood Cliffs, NJ: Prentice-Hall.

- Haladyna, T. M., & Downing, S. M. (1989a). A taxonomy of multiple-choice item-writing rules. Applied Measurement in Education, 2(1), 37-50.

- Haladyna, T. M., & Downing, S. M. (1989b). Validity of a taxonomy of multiple-choice item-writing rules. Applied Measurement in Education, 2(1), 51-78.
